The right tool for the right job

In SharePoint, I use a couple of tools to manage the processes within my organization. For process automation, my default is Nintex: I automate the process where it makes sense and improve the user experience wherever possible. When a process is document based, I use Document Sets, Managed Metadata and Content Types to automate document provisioning for the process, and then bring in Nintex for additional functionality.

Today, I want to write mainly about Document Sets and how we can use them to make document management easier within your SharePoint ecosystem. One of the greatest difficulties in document and records management is the classification and application of metadata. It is the holy grail, so to speak, of document management, and an area where many third-party tools exist to perform auto-classification. In reality, with Document Sets, Managed Metadata and Content Types, supported by Nintex workflows and forms, we can do almost anything you need for document classification.

Content Types

I first want to talk about Content Types. Why? Because a Document Set is itself a Content Type, and because part of the reason we use Document Sets is to manage Content Types within a container. So, let's get to it...

What is a Content Type? A Content Type is a piece of reusable content that has a predefined selection of attributes (metadata) assigned to it. Think of it like a document template because, in reality, that is what most people use Content Types for. Something many users do not realize, however, is that with Content Types you can build a taxonomy (hierarchical structure) of content and metadata, and that this taxonomy is a requirement rather than an extra. When we deal with content in SharePoint, the expectation is that an Information Architecture is designed and created, and you do that using Content Types and metadata.

So let's quickly go through Content Type creation so it makes a bit more sense. The following steps were created using Office 365, but they are the same for on-premises SharePoint or SharePoint Online.

Creating a Content Type

- Go to Site Settings

- Choose Site content types

- In the Site content types screen, you will see all the content types applicable to the site, arranged into groups. At the very top you will see the Create button; click it.

- Create the Content Type
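To make the taxonomy idea a little more concrete, here is a minimal sketch in plain Python of how a child content type builds on the columns of its parent. It is only a model of the concept, not SharePoint's API: the `ContentType` class, the `effective_columns` method and the three example types are all hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ContentType:
    """Illustrative stand-in for a content type (not a real SharePoint API)."""
    name: str
    columns: list[str] = field(default_factory=list)
    parent: Optional[ContentType] = None

    def effective_columns(self) -> list[str]:
        """Return inherited columns first, then this type's own columns."""
        inherited = self.parent.effective_columns() if self.parent else []
        # Keep the order and skip columns already inherited from an ancestor.
        return inherited + [c for c in self.columns if c not in inherited]


# Hypothetical three-level taxonomy for project documentation.
document = ContentType("Document", ["Name", "Title"])
project_document = ContentType("Project Document", ["Project Number"], parent=document)
status_report = ContentType("Status Report", ["Reporting Period"], parent=project_document)

print(status_report.effective_columns())
```

The point of the sketch is that each level only declares what is new; everything above it in the hierarchy comes along for free.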

Now this blog is about Document Sets, so that is all I am going to say about Content Types for now. Stay tuned: in future blogs, I will provide more insight into Content Types and how to use them.

Document Sets

Now you may be wondering what a Document Set is. Simply put, it is a folder within SharePoint that is used to apply shared metadata to all the objects within that folder. For example, let's say you have a project. The project has a project name, a project manager, a project number, a sponsor, a status and perhaps a region or other metadata that tells you about the project. Some of this information you would want to associate with your documents, like the Project Name and Project Number. Other information, like the Project Manager and Sponsor, is not that important at the document level and doesn't need to be associated. When you configure the Document Set, you decide what is assigned to the documents and what is not.

Another feature of the Document Set is the ability to specify which content types can be created within it, much like you would do in a document library or list. You can pick and choose what can and cannot be created within the Document Set. Each content type within the Document Set has two portions of metadata: the metadata assigned from the Document Set, and the content type metadata that was assigned when you created the content type. Now you may ask, should I add the Document Set metadata I want to share to each content type I am going to use? The answer is no. They are independent, and your Document Set management will be easier if you don't do that extra work.
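As a rough illustration of those "two portions" of metadata, the sketch below combines the values a document picks up from its set with the values from its own content type. This is plain Python rather than anything SharePoint exposes; the `document_metadata` function and the sample project values are hypothetical, and the sketch assumes the shared columns and the content type columns do not overlap.

```python
def document_metadata(set_values, shared_columns, own_values):
    """Combine the values a document gets from its set with its own values."""
    # Only the columns marked as shared are passed down to the document.
    shared = {col: set_values[col] for col in shared_columns if col in set_values}
    # The content type's own columns sit alongside the shared ones
    # (assumption: the two groups of columns do not overlap).
    return {**shared, **own_values}


# Hypothetical project-level metadata held on the Document Set.
project_set = {
    "Project Name": "Website Redesign",
    "Project Number": "P-1042",
    "Project Manager": "A. Example",
    "Sponsor": "B. Example",
}
shared_columns = ["Project Name", "Project Number"]

report = document_metadata(project_set, shared_columns, {"Reporting Period": "Q1"})
print(report)
```

The Project Manager and Sponsor stay on the set, while the Project Name and Project Number travel with every document in it.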

Finally, Document Sets (and this is the reason for the name) allow you to automatically provision a group of documents as a set. In other words, every time you create a new [Document Set Name], it will create a set of however many documents you decide, all ready for collaboration and all with the Document Set metadata already assigned.

Okay, so enough about all the things we can do with a Document Set. We created one when looking at Content Types, so let's see what it can do...

Using a Document Set

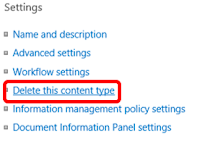

I am going to pick up where we left off in the last example, just after we clicked OK. If you closed out of it, not to worry: you can get back into the Document Set by going to Site Settings, choosing Site content types and then clicking on the content type you originally created.

- Create all the metadata for the Document Set. I will add three pieces of metadata for the demo, Category, Status and Keywords, all from the Core Document Columns group.

- My intent is that Category and Keywords are both metadata fields that are important to the documents in the Document Set, while Status is only important to the Document Set itself. So now I need to go into the Document Set settings (under Settings) and modify the Document Set.

- To describe the Document Set Settings and what each section does, I have labelled an image and will go through each section, its purpose and what you need to do. The only one out of order is Shared Columns; I want to go through that section first.

- Shared Columns - As I mentioned, I want Category and Keywords included with all the documents in the set, and this is where I do that. Checked columns are shared with the contents of the Document Set; unchecked columns are not.

- Allowed Content Types - This is where you choose the content types that are available for creation under the new document button inside the Document Set.

- Default Content - This is where you add the documents (not templates) that will automatically be added to every new Document Set created.

- Welcome Page Columns - Document Sets have a custom welcome page layout. At the top of the page are a folder icon, the project name, a description and any additional columns you want to add.

- Welcome Page - The Welcome page can be customized by you, allowing for additional web parts and content.
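To tie the settings sections together, here is a small sketch of what provisioning a new Document Set amounts to, using the demo columns from above. It is a plain Python model and not SharePoint's object model: `DocumentSetSettings`, `provision`, the two content type names and the two file names are hypothetical. The check that default content uses an allowed content type is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass
class DocumentSetSettings:
    """Illustrative model of the settings sections (not a SharePoint API)."""
    shared_columns: list         # columns copied down to every document
    allowed_content_types: list  # content types offered inside the set
    default_content: list        # (file name, content type) pairs to provision


def provision(settings, set_values):
    """Return the documents a new set would start with, shared values applied."""
    shared = {col: set_values[col] for col in settings.shared_columns}
    docs = []
    for file_name, content_type in settings.default_content:
        # Assumption: default content must use one of the allowed content types.
        if content_type not in settings.allowed_content_types:
            raise ValueError(f"{content_type!r} is not allowed in this set")
        docs.append({"file": file_name, "content_type": content_type, **shared})
    return docs


settings = DocumentSetSettings(
    shared_columns=["Category", "Keywords"],
    allowed_content_types=["Project Charter", "Status Report"],
    default_content=[
        ("Charter.docx", "Project Charter"),
        ("Status Report.docx", "Status Report"),
    ],
)

# Status is set on the Document Set itself but is not a shared column.
new_set = {"Category": "Projects", "Keywords": "demo", "Status": "Draft"}
documents = provision(settings, new_set)
for doc in documents:
    print(doc)
```

Each provisioned document carries Category and Keywords from the set, while Status stays at the set level, which mirrors the checked and unchecked boxes in the Shared Columns section.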

I would be happy to answer questions about this or anything else to do with SharePoint, Information Governance and Information Architecture. Comments and feedback, good or bad, are appreciated. If you like what you see, follow my blog or follow me on Twitter, LinkedIn and Facebook: